As the demand for advanced machine learning models skyrockets, so does the environmental footprint of training and deploying these systems. Training large-scale neural networks can consume vast amounts of electricity and contribute to greenhouse gas emissions. This blog post dives into the emerging field of Green AI, outlining why sustainability matters, key strategies to reduce energy consumption, and how developers and organizations can adopt eco-friendly practices to build a greener future with artificial intelligence.

1. Understanding Green AI

Green AI refers to the practice of designing, developing, and deploying machine learning models in a way that minimizes their environmental impact. Instead of focusing solely on metrics like accuracy or F1-score, Green AI emphasizes the importance of computational efficiency, energy consumption, and carbon footprint as critical evaluation criteria. By balancing performance with sustainability, researchers and engineers can create models that are both powerful and planet-friendly.

2. Why Sustainability Matters in AI

- Energy Consumption: Training state-of-the-art models like GPT-3 consumes large amounts of electricity; published estimates for a single GPT-3 training run are on the order of 1,000 MWh. Reducing compute requirements directly lowers electricity use.

- Carbon Emissions: Depending on the energy mix of data centers, AI workloads can emit significant volumes of CO₂. Prioritizing low-carbon energy sources and efficient hardware helps curb these emissions.

- Cost Savings: Efficient training and inference not only benefit the environment but also reduce operational expenses, making AI more accessible to smaller organizations.

- Regulatory Compliance: As governments worldwide propose stricter emissions regulations, adopting Green AI practices positions companies ahead of policy changes.

3. Strategies for Energy-Efficient Machine Learning

Multiple approaches can help reduce the energy footprint of AI systems without sacrificing performance. Below are some proven strategies:

3.1 Model Compression and Pruning

Large deep learning models often contain redundant parameters. Weight pruning removes less important connections, and quantization reduces parameter precision, for example from 32-bit floats to 8-bit integers, which alone cuts weight storage by roughly 4x. Smaller models need less compute and memory bandwidth, which lowers energy consumption, most directly at inference time.
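To make the two techniques concrete, here is a minimal pure-Python sketch of unstructured magnitude pruning followed by symmetric 8-bit quantization. The function names and the example weights are illustrative assumptions, not taken from any particular library; real frameworks add per-layer or per-channel scales, calibration, and fine-tuning after pruning.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the smallest-magnitude fraction of weights (unstructured pruning)."""
    k = int(len(weights) * sparsity)  # number of weights to drop
    if k == 0:
        return list(weights)
    # Indices of the k weights with the smallest absolute value
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


def quantize_int8(weights):
    """Symmetric linear quantization of floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map the 8-bit integers back to approximate float values."""
    return [v * scale for v in q]


# Hypothetical weights, for illustration only
weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02, 0.45, -0.9]
pruned = magnitude_prune(weights, sparsity=0.5)  # half the weights become 0.0
q, scale = quantize_int8(pruned)                 # each value now fits in 8 bits
restored = dequantize(q, scale)                  # close to `pruned`, within scale / 2
```

The rounding error per weight is bounded by half the scale, which is why quantization usually costs little accuracy when the weight range is small.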

3.2 Efficient Architecture Design

Designing neural network architectures with a focus on efficiency has become a major research trend. Examples include MobileNet, EfficientNet, and compact Transformer variants. These models rely on efficiency-oriented building blocks, such as depthwise separable convolutions and lighter attention mechanisms, to deliver competitive accuracy with fewer operations.
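The saving from depthwise separable convolutions can be checked with simple arithmetic. The sketch below counts multiply-accumulate operations (MACs) for one layer, assuming stride 1 and an output feature map the same size as the input; the layer dimensions are example values chosen for illustration.

```python
def standard_conv_macs(h, w, c_in, c_out, k):
    """MACs for a k x k standard convolution over an h x w output map."""
    return h * w * c_in * c_out * k * k


def depthwise_separable_macs(h, w, c_in, c_out, k):
    """MACs for a k x k depthwise conv followed by a 1 x 1 pointwise conv."""
    depthwise = h * w * c_in * k * k  # one k x k filter per input channel
    pointwise = h * w * c_in * c_out  # 1 x 1 conv mixes the channels
    return depthwise + pointwise


# Example layer (assumed sizes): 56 x 56 map, 128 -> 128 channels, 3 x 3 kernel
std = standard_conv_macs(56, 56, 128, 128, 3)
sep = depthwise_separable_macs(56, 56, 128, 128, 3)
ratio = sep / std  # algebraically equal to 1 / c_out + 1 / k**2

print(f"standard: {std:,} MACs, separable: {sep:,} MACs, ratio: {ratio:.3f}")
```

For a 3 x 3 kernel the ratio approaches 1/9 as the channel count grows, so the separable layer needs roughly 8 to 9 times fewer operations.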

3.3 Transfer Learning and Fine-Tuning

Training models from scratch is energy-intensive. Transfer learning leverages pre-trained models and fine-tunes them on specific tasks using substantially less data and compute. This approach lowers the overall training cost while still achieving high performance in domain-specific applications.

3.4 Smart Hyperparameter Optimization

Hyperparameter search can be compute-hungry when it naively explores large parameter grids. Bayesian optimization, evolutionary strategies, and early-stopping rules reduce wasted compute by homing in on promising settings sooner and halting unpromising experiments.
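One common early-stopping scheme is successive halving: every configuration gets a small budget, the worse half is discarded, and the survivors continue with a doubled budget. The sketch below is a simplified illustration; `toy_loss` is a made-up objective standing in for a real training run, and the learning-rate grid is hypothetical.

```python
import math


def successive_halving(configs, evaluate, min_budget=1, eta=2):
    """Repeatedly keep the best 1/eta of configs while multiplying the budget by eta.

    `evaluate(config, budget)` returns a loss (lower is better).
    Returns the winning config and the total budget spent.
    """
    survivors = list(configs)
    budget = min_budget
    spent = 0
    while len(survivors) > 1:
        scored = [(evaluate(c, budget), c) for c in survivors]
        spent += budget * len(survivors)
        scored.sort(key=lambda pair: pair[0])  # best (lowest loss) first
        keep = max(1, len(survivors) // eta)
        survivors = [c for _, c in scored[:keep]]
        budget *= eta
    return survivors[0], spent


def toy_loss(config, budget):
    """Hypothetical objective: best at lr = 0.1, improving with more budget."""
    return abs(math.log10(config["lr"]) + 1) + 1 / budget


configs = [{"lr": lr} for lr in (1.0, 0.3, 0.1, 0.03, 0.01, 0.003, 0.001, 0.0003)]
best, spent = successive_halving(configs, toy_loss)
print(best, spent)
```

With eight candidates, this run spends 24 budget units, whereas training all eight at the final budget of 4 would cost 32. The gap widens quickly as the number of candidates grows.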

4. Sustainable Hardware and Infrastructure

Beyond algorithmic improvements, choosing the right hardware and data center infrastructure is crucial for Green AI.

4.1 Low-Power GPUs and TPUs

Leading vendors now offer accelerators designed for energy efficiency. Google Cloud TPUs, NVIDIA Ampere GPUs, and custom AI ASICs can deliver higher throughput per watt than general-purpose hardware.

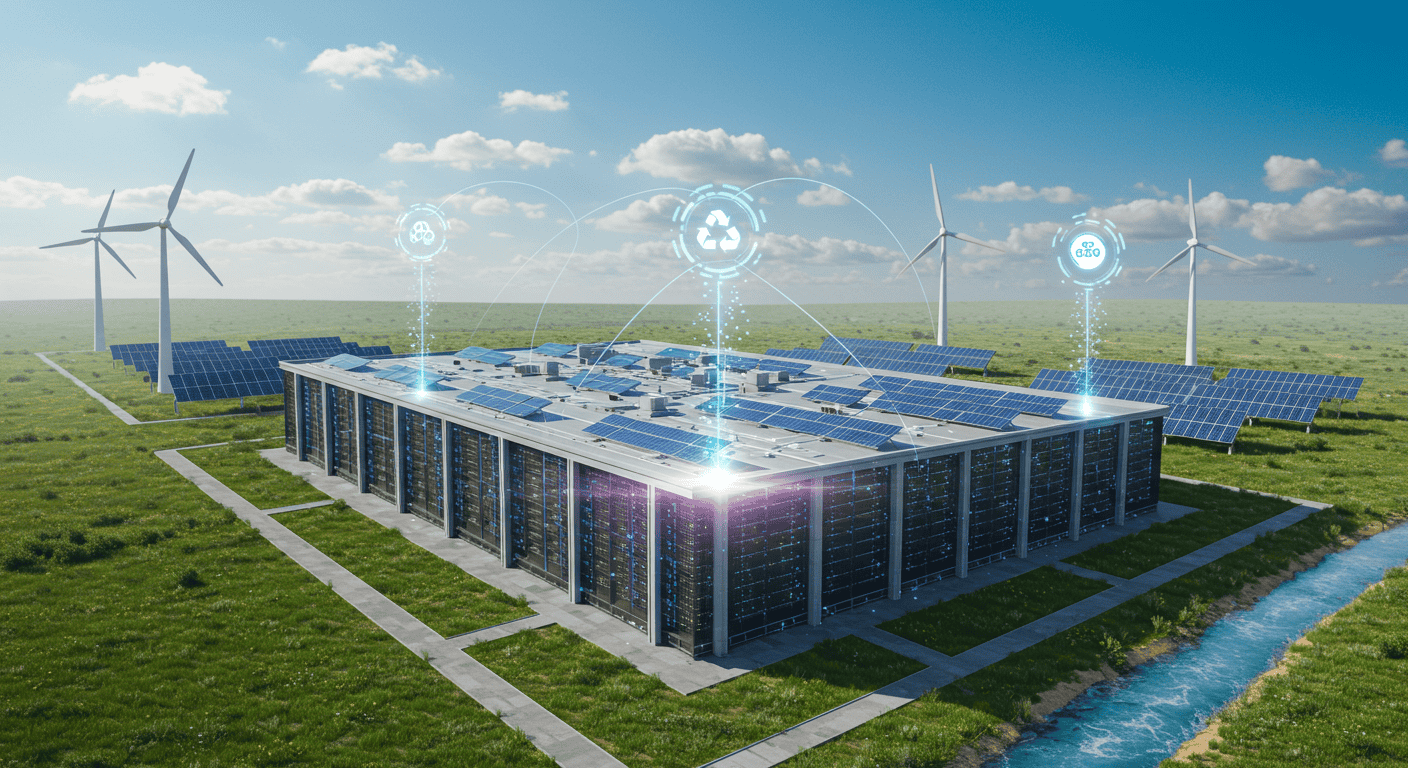

4.2 Renewable Energy-Powered Data Centers

Many cloud providers are committing to carbon-neutral or even carbon-negative operations by sourcing electricity from solar, wind, or hydroelectric power. By choosing these green regions for compute-intensive tasks, organizations can significantly reduce indirect emissions associated with AI workloads.
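The effect of region choice follows from one multiplication: emissions equal energy used times the grid's carbon intensity. The sketch below assumes hypothetical region names and placeholder intensity values; real figures should come from your cloud provider or a grid-data service.

```python
def lowest_carbon_region(intensity_g_per_kwh):
    """Return the region name with the lowest grid carbon intensity."""
    return min(intensity_g_per_kwh, key=intensity_g_per_kwh.get)


def job_emissions_kg(energy_kwh, intensity_g_per_kwh):
    """Emissions in kg CO2e for a job using `energy_kwh` of electricity."""
    return energy_kwh * intensity_g_per_kwh / 1000


# Placeholder values in g CO2e per kWh, NOT real measurements
regions = {"region-hydro": 30.0, "region-mixed": 350.0, "region-coal": 700.0}

best = lowest_carbon_region(regions)
energy_kwh = 500.0  # assumed energy use of one training job
saved_kg = (job_emissions_kg(energy_kwh, regions["region-coal"])
            - job_emissions_kg(energy_kwh, regions[best]))
print(f"Run in {best}; avoids {saved_kg:.0f} kg CO2e versus the highest-carbon region")
```

The same job and the same hardware can differ by an order of magnitude in emissions depending only on where it runs.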

4.3 Edge Computing and On-Device AI

Offloading inference from the cloud to edge devices like smartphones, IoT sensors, and embedded systems reduces data transmission overhead and central data center load. Lightweight models optimized for on-device execution can operate on lower-power processors and contribute to overall energy savings.

5. Measuring and Reporting Carbon Footprint

Transparent reporting of energy use and carbon emissions helps organizations track progress and set reduction targets. Tools and frameworks to measure AI carbon footprint include:

- ML CO₂ Impact: An open-source calculator that estimates the carbon emissions of training and inference by accounting for hardware specs, region energy mix, and runtime duration.

- Experiment Tracking Platforms: Services like Weights & Biases and Comet integrate energy logging to correlate model performance with resource consumption.

- Internal Dashboards: Custom dashboards that aggregate GPU utilization, power draw, and cloud region emission factors provide real-time insights for data science teams.
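The core calculation behind such dashboards is small. The sketch below integrates sampled power draw into energy, then applies a data center overhead factor (PUE, power usage effectiveness) and a grid emission factor. The sample readings, the PUE of 1.2, and the 400 g CO2e/kWh factor are assumed values for illustration; in practice the samples would come from a GPU power sensor.

```python
def energy_kwh(samples):
    """Integrate (timestamp_seconds, power_watts) samples with the trapezoidal rule."""
    joules = 0.0
    for (t0, p0), (t1, p1) in zip(samples, samples[1:]):
        joules += (p0 + p1) / 2 * (t1 - t0)
    return joules / 3.6e6  # 1 kWh = 3.6 million joules


def emissions_kg(samples, pue, grid_g_per_kwh):
    """Estimate kg CO2e: device energy x facility overhead x grid emission factor."""
    return energy_kwh(samples) * pue * grid_g_per_kwh / 1000


# Hypothetical one-hour run, sampled every 30 minutes: (seconds, watts)
samples = [(0, 250.0), (1800, 300.0), (3600, 300.0)]

kwh = energy_kwh(samples)
kg = emissions_kg(samples, pue=1.2, grid_g_per_kwh=400.0)
print(f"{kwh:.4f} kWh, {kg:.3f} kg CO2e")
```

Logging this estimate next to accuracy for every experiment makes it possible to compare models on both performance and footprint.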

6. Industry Use Cases of Green AI

Early adopters across various sectors are demonstrating the benefits of sustainable AI:

- Healthcare: Lightweight neural networks for medical image analysis deployed on edge devices in rural clinics reduce reliance on centralized, power-hungry servers.

- Agriculture: Energy-efficient pest detection models run on solar-powered drones to minimize battery usage and extend flight times.

- Finance: Pruned and quantized models for fraud detection operate faster and cut compute costs in high-volume transaction processing.

- Smart Cities: Edge AI cameras with compressed vision models monitor traffic flow and air quality, reducing data transfer and cloud processing requirements.

7. Challenges and Future Directions

While Green AI has made significant strides, challenges remain:

- Benchmark Standardization: Establishing industry-wide benchmarks that factor in energy metrics alongside accuracy is still an evolving area.

- Trade-Offs: Striking the right balance between model size, speed, and predictive performance requires new research into multi-objective optimization.

- Accessibility of Tools: Wider adoption demands user-friendly libraries and platforms that automatically optimize for energy efficiency.

Looking ahead, innovations such as neuromorphic chips, sparse subnetworks identified through the lottery ticket hypothesis, and carbon-aware schedulers promise to further reduce the environmental impact of AI while unlocking new application domains.

Conclusion

Green AI represents a pivotal shift in the AI community’s mindset: treating sustainability as a core design principle. By adopting model compression, efficient architectures, renewable infrastructure, and transparent carbon accounting, organizations can deliver cutting-edge intelligence while safeguarding the planet. As the field matures, collaboration between researchers, cloud providers, and policymakers will be key to standardizing best practices and technologies that enable truly eco-friendly AI solutions.

Ready to make your next AI project eco-friendly? Start by measuring your model’s energy footprint today, experiment with lightweight architectures, and choose renewable-powered compute regions. Together, we can build a smarter and greener tomorrow.
