High-quality datasets drive progress in machine learning and AI, yet collecting real-world data often runs into roadblocks: privacy regulations, high acquisition costs, and potential bias. AI-driven synthetic data generation offers a compelling alternative. By creating artificial datasets that mimic the statistical properties of real data, organizations can train models at scale while lowering privacy risk and cost. In this post, we explore the fundamentals, techniques, and best practices for using synthetic data generation in your next AI project.

What Is Synthetic Data?

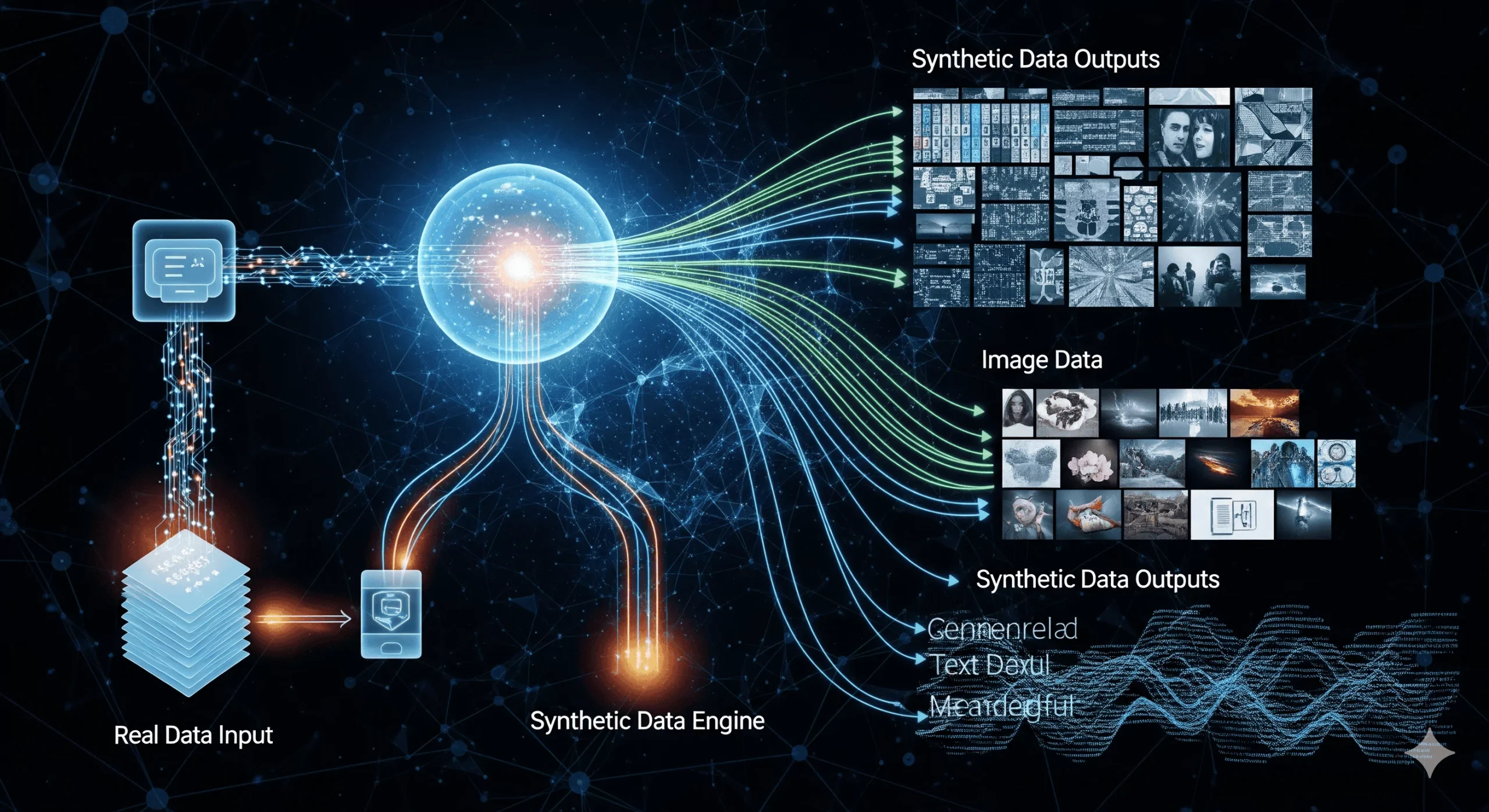

Synthetic data is artificially generated information that replicates the structure, patterns, and correlations of real datasets without copying individual records. Unlike anonymized data, which is derived by masking or altering real records, synthetic data is intended to have no one-to-one correspondence with any individual record. It can take many forms, including tabular data, images, time series, text, and audio. Using generative AI models such as Generative Adversarial Networks (GANs), Variational Autoencoders (VAEs), and diffusion models, data scientists can produce large volumes of high-fidelity data tailored to specific use cases.

Why Synthetic Data Matters

Synthetic data addresses several challenges that hinder AI adoption:

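- Privacy by Design: Because well-generated synthetic records do not correspond to real individuals, organizations can reduce regulatory exposure and breach risk, provided the generator has not memorized its training data.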

- Scalability: Generate as many samples as needed to augment small datasets or correct class imbalance.

- Cost Efficiency: Reduce reliance on expensive data collection campaigns, especially in specialized domains like healthcare or autonomous driving.

- Bias Mitigation: Control distribution properties to reduce sampling bias and improve model fairness.

Key Techniques for Generating Synthetic Data

Several approaches enable synthetic data creation, each with strengths and tradeoffs:

- Rule-Based Generation: Uses domain knowledge and statistical rules to generate structured tabular data. Fast and interpretable but may lack high-dimensional realism.

- Simulation & Agent-Based Models: Employs physics engines or virtual environments—common in autonomous vehicle and robotics training.

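- Generative Adversarial Networks (GANs): Two-network frameworks in which a generator produces samples and a discriminator learns to tell them apart from real data. They can produce highly realistic images and time-series data but require careful tuning to avoid mode collapse, where the generator outputs only a narrow range of samples.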

- Variational Autoencoders (VAEs): Encode real samples into latent representations and decode to generate new data. Offer stable training but sometimes blur fine details.

- Diffusion Models: The current state of the art for image and audio generation. They learn to reverse a gradual noising process, turning random noise into detailed samples step by step. Computationally heavy, but they yield very high fidelity.
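
To make the simplest of these techniques concrete, here is a minimal sketch of rule-based generation using only the Python standard library. The table schema, segment weights, age ranges, and spending formula are all hypothetical values chosen for illustration; in a real project they would come from domain experts or from statistics measured on real data.

```python
import random

# Hypothetical rules for a toy "customer" table. The column names, ranges,
# and coefficients are illustrative assumptions, not real statistics.
SEGMENTS = ["student", "professional", "retired"]
SEGMENT_WEIGHTS = [0.2, 0.6, 0.2]
AGE_RANGES = {"student": (18, 25), "professional": (26, 64), "retired": (65, 90)}


def generate_customer(rng: random.Random) -> dict:
    """Draw one synthetic record from hand-written rules."""
    segment = rng.choices(SEGMENTS, weights=SEGMENT_WEIGHTS, k=1)[0]
    low, high = AGE_RANGES[segment]
    age = rng.randint(low, high)
    # Rule: expected spend rises with age up to 64, then stays flat.
    base_spend = 200.0 + 15.0 * min(age, 64)
    # Gaussian noise around the rule, clipped so spend is never negative.
    monthly_spend = max(0.0, rng.gauss(base_spend, 100.0))
    return {"segment": segment, "age": age, "monthly_spend": round(monthly_spend, 2)}


def generate_table(n_rows: int, seed: int = 0) -> list[dict]:
    """Generate a reproducible table of synthetic customers."""
    rng = random.Random(seed)
    return [generate_customer(rng) for _ in range(n_rows)]


if __name__ == "__main__":
    for row in generate_table(5):
        print(row)
```

Every rule here is visible and auditable, which is the main appeal of the approach. The tradeoff is that the generator only captures relationships someone thought to write down.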

Real-World Use Cases

Organizations across industries leverage synthetic data to overcome data scarcity and privacy constraints:

- Healthcare: Generate patient records and medical images for diagnostic model training without exposing PHI.

- Autonomous Vehicles: Simulate diverse driving scenarios—weather, lighting, traffic—to enrich road-scene datasets.

- Finance: Create transaction histories for fraud detection models, ensuring no real customer data is used.

- Retail & Marketing: Produce synthetic customer profiles for personalization engines and A/B testing.

Balancing Quality, Privacy, and Utility

Generating synthetic data involves tradeoffs between realism, privacy guarantees, and downstream utility. Key considerations include:

- Privacy Metrics: Techniques like differential privacy can be integrated into generator training to bound, in a quantifiable way, how much any single real record can leak.

- Statistical Fidelity: Use statistical distances and divergences (e.g., KL divergence, Wasserstein distance) to compare distributions of real and synthetic data.

- Task-Specific Utility: Evaluate synthetic data by training models and measuring performance on real test sets.

- Diversity vs. Overfitting: Ensure generative models do not memorize training samples, which risks privacy leakage and reduces the diversity of the generated data.
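
As a rough illustration of the statistical fidelity check, the sketch below compares one numeric column of real and synthetic data using the two measures named above. It makes simplifying assumptions: the Wasserstein function only handles equal sample sizes, the histogram range and bin count are chosen by hand, and the "real" and "synthetic" samples are simulated Gaussian draws rather than output from an actual generator.

```python
import math
import random


def wasserstein_1d(real: list[float], synthetic: list[float]) -> float:
    """Empirical 1-D Wasserstein-1 distance for two equal-sized samples.

    With equal sample sizes it reduces to the mean absolute difference
    between the sorted values.
    """
    if len(real) != len(synthetic):
        raise ValueError("this sketch assumes equal sample sizes")
    pairs = zip(sorted(real), sorted(synthetic))
    return sum(abs(a - b) for a, b in pairs) / len(real)


def histogram(values, low, high, bins, smoothing=1.0):
    """Smoothed histogram probabilities over [low, high].

    Values outside the range are clamped into the edge bins. The smoothing
    term keeps every bin positive so the KL divergence stays finite.
    """
    counts = [smoothing] * bins
    width = (high - low) / bins
    for v in values:
        idx = min(bins - 1, max(0, int((v - low) / width)))
        counts[idx] += 1
    total = sum(counts)
    return [c / total for c in counts]


def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions over the same bins."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


if __name__ == "__main__":
    rng = random.Random(0)
    real = [rng.gauss(50, 10) for _ in range(2000)]
    close = [rng.gauss(50, 10) for _ in range(2000)]    # same distribution
    shifted = [rng.gauss(60, 10) for _ in range(2000)]  # mean is off by 10
    for name, synth in [("close", close), ("shifted", shifted)]:
        w1 = wasserstein_1d(real, synth)
        kl = kl_divergence(histogram(real, 0, 100, 20), histogram(synth, 0, 100, 20))
        print(f"{name}: W1={w1:.3f}  KL={kl:.4f}")
```

Both scores should come out noticeably larger for the shifted sample. For unequal sample sizes, a library routine such as `scipy.stats.wasserstein_distance` is a more practical choice than this hand-rolled version. Per-column checks like these also say nothing about correlations between columns, so they complement rather than replace task-specific evaluation.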

Implementation Best Practices

To maximize the value of synthetic data, follow these steps:

- Define Objectives: Clarify whether you need data augmentation, privacy preservation, or bias correction.

- Curate Base Data: Start with a representative sample of real data to train your generative models.

- Select Appropriate Models: Match the technique—GAN, VAE, simulator—to your data type and quality needs.

- Iterate & Validate: Continuously assess realism and privacy metrics, refining model hyperparameters.

- Integrate Seamlessly: Build pipelines that mix real and synthetic data, version control datasets, and automate retraining.
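
The last step can be sketched in a few lines. The example below is one possible way to mix real and synthetic rows at a target ratio, tag each row with its provenance, and derive a content hash that can serve as a dataset version. The function names, the `source` field, and the 12-character hash length are assumptions made for illustration, not part of any particular tool.

```python
import hashlib
import json
import random


def mix_datasets(real, synthetic, synthetic_fraction, seed=0):
    """Return a shuffled training set with a target share of synthetic rows.

    All real rows are kept. Synthetic rows are sampled so that they make up
    roughly `synthetic_fraction` of the result (fewer if not enough exist).
    Each row gets a `source` tag so provenance survives the mix.
    """
    if not 0.0 <= synthetic_fraction < 1.0:
        raise ValueError("synthetic_fraction must be in [0, 1)")
    rng = random.Random(seed)
    n_synth = round(len(real) * synthetic_fraction / (1.0 - synthetic_fraction))
    n_synth = min(n_synth, len(synthetic))
    sampled = rng.sample(synthetic, n_synth)
    mixed = [dict(r, source="real") for r in real]
    mixed += [dict(s, source="synthetic") for s in sampled]
    rng.shuffle(mixed)
    return mixed


def dataset_version(rows) -> str:
    """Order-independent content hash, usable as a dataset version ID."""
    canonical = sorted(json.dumps(r, sort_keys=True) for r in rows)
    digest = hashlib.sha256("\n".join(canonical).encode("utf-8"))
    return digest.hexdigest()[:12]


if __name__ == "__main__":
    real = [{"x": i} for i in range(80)]
    synthetic = [{"x": -i} for i in range(100)]
    mixed = mix_datasets(real, synthetic, synthetic_fraction=0.2)
    n_synth = sum(row["source"] == "synthetic" for row in mixed)
    print(f"{len(mixed)} rows, {n_synth} synthetic, version {dataset_version(mixed)}")
```

Recording the version string alongside each trained model makes it possible to trace which mix of real and synthetic data produced it.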

Ethical and Regulatory Considerations

While synthetic data offers privacy benefits, ethical and legal frameworks still apply. Key considerations include:

- Regulatory Compliance: Confirm that synthetic data usage aligns with GDPR, HIPAA, or other applicable regulations.

- Bias Amplification: Ensure synthetic datasets do not perpetuate historical biases present in the training data.

- Transparency: Document generation processes so stakeholders understand data provenance and limitations.

- Security Risks: Safeguard generative models to prevent malicious actors from extracting sensitive information.
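
The bias amplification point lends itself to a simple automated check. The sketch below compares the gap in positive-outcome rates between groups in the real data with the same gap in the synthetic data. The field names, the choice of metric, and the 0.02 tolerance are illustrative assumptions; a real fairness audit would need metrics agreed with domain experts.

```python
from collections import defaultdict


def positive_rate_by_group(rows, group_key, outcome_key):
    """Share of rows with a truthy outcome, per group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for row in rows:
        totals[row[group_key]] += 1
        positives[row[group_key]] += 1 if row[outcome_key] else 0
    return {g: positives[g] / totals[g] for g in totals}


def rate_gap(rates):
    """Largest difference in positive rate between any two groups."""
    return max(rates.values()) - min(rates.values())


def bias_amplified(real_rows, synthetic_rows, group_key, outcome_key, tolerance=0.02):
    """True if the synthetic data widens the between-group gap beyond the tolerance."""
    real_gap = rate_gap(positive_rate_by_group(real_rows, group_key, outcome_key))
    synth_gap = rate_gap(positive_rate_by_group(synthetic_rows, group_key, outcome_key))
    return synth_gap > real_gap + tolerance


if __name__ == "__main__":
    # Toy data: group A is approved 50% of the time, group B 40%.
    real = ([{"group": "A", "approved": 1}] * 5 + [{"group": "A", "approved": 0}] * 5
            + [{"group": "B", "approved": 1}] * 4 + [{"group": "B", "approved": 0}] * 6)
    # A generator that exaggerates the gap: 60% for A, 30% for B.
    synthetic = ([{"group": "A", "approved": 1}] * 6 + [{"group": "A", "approved": 0}] * 4
                 + [{"group": "B", "approved": 1}] * 3 + [{"group": "B", "approved": 0}] * 7)
    print("amplified:", bias_amplified(real, synthetic, "group", "approved"))
```

A check like this only flags amplification relative to the real data. It does not tell you whether the gap in the real data was acceptable to begin with.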

Challenges and Future Directions

Synthetic data is advancing rapidly, yet hurdles remain:

- Model Complexity: Training state-of-the-art diffusion models demands significant compute resources.

- Quality Assurance: Automating the validation of synthetic datasets at scale is still an open research problem.

- Standardization: Industry-wide benchmarks and toolkits are needed to compare and certify synthetic data quality.

- Interdisciplinary Collaboration: Combining domain experts, data scientists, and ethicists will shape responsible adoption.

Conclusion

AI-driven synthetic data generation is reshaping how organizations approach privacy, scalability, and model performance. By carefully selecting generation techniques, validating quality, and adhering to ethical guidelines, you can use synthetic datasets to train robust, fair, and privacy-preserving AI systems. As the field matures, expect new standards, tools, and best practices to emerge; teams that adopt them early will be well placed to lead on responsible data innovation.

Frequently Asked Questions (FAQs)

1. Is synthetic data truly private?

When generated with privacy-enhancing methods such as differential privacy, and when the generator has not memorized real samples, synthetic data carries a low risk of reidentification. The risk is not zero, so it is worth measuring rather than assuming.
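
To give a feel for how differential privacy can enter a generator, here is a deliberately small sketch for a single categorical column. It adds Laplace noise to the category counts and then samples synthetic values from the noisy counts. It assumes the list of categories is public and fixed in advance, and that adding or removing one person changes exactly one count by 1. The function names are hypothetical, and the floating-point noise sampling is not hardened, so treat it as a teaching aid rather than a production mechanism.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def dp_histogram(values, categories, epsilon, rng):
    """Noisy count per category.

    `categories` must be a public, fixed list (not derived from the data).
    One record changes one count by 1, so Laplace noise with scale
    1/epsilon on each count gives epsilon-differential privacy.
    Clipping at zero is post-processing and does not weaken the guarantee.
    """
    counts = {c: 0 for c in categories}
    for v in values:
        counts[v] += 1
    return {c: max(0.0, n + laplace_noise(1.0 / epsilon, rng)) for c, n in counts.items()}


def sample_synthetic(noisy_counts, n, rng):
    """Draw n synthetic values with probability proportional to the noisy counts."""
    categories = list(noisy_counts)
    weights = [noisy_counts[c] for c in categories]
    if sum(weights) <= 0:
        # All counts were clipped to zero: fall back to a uniform draw.
        weights = [1.0] * len(categories)
    return rng.choices(categories, weights=weights, k=n)


if __name__ == "__main__":
    rng = random.Random(42)
    real = ["A"] * 700 + ["B"] * 250 + ["C"] * 50
    noisy = dp_histogram(real, ["A", "B", "C"], epsilon=1.0, rng=rng)
    print(noisy)
    print(sample_synthetic(noisy, 10, rng))
```

A smaller `epsilon` means more noise and stronger privacy, at the cost of fidelity. That is the same quality versus privacy tradeoff discussed earlier, expressed as a single parameter.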

2. How do I evaluate the quality of synthetic data?

Use statistical distance measures (e.g., Wasserstein distance), privacy metrics, and downstream model performance tests to ensure synthetic data meets your requirements.
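
The downstream performance test is often called "train on synthetic, test on real". A minimal sketch of the idea follows, assuming a toy two-class dataset of Gaussian blobs and a nearest-centroid classifier as a stand-in for your actual model. The "synthetic" training set here is simulated with a slightly wider spread to mimic an imperfect generator.

```python
import random


def fit_centroids(rows):
    """rows: list of (features, label). Returns the mean feature vector per label."""
    sums, counts = {}, {}
    for features, label in rows:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, x in enumerate(features):
            acc[i] += x
        counts[label] = counts.get(label, 0) + 1
    return {label: [s / counts[label] for s in acc] for label, acc in sums.items()}


def predict(centroids, features):
    """Return the label whose centroid is closest to the feature vector."""
    def sq_dist(center):
        return sum((a - b) ** 2 for a, b in zip(features, center))
    return min(centroids, key=lambda label: sq_dist(centroids[label]))


def accuracy(centroids, rows):
    return sum(predict(centroids, f) == y for f, y in rows) / len(rows)


def make_blobs(n, centers, spread, rng):
    """Toy stand-in for a dataset: Gaussian blobs around per-class centers."""
    rows = []
    for _ in range(n):
        label = rng.choice(list(centers))
        cx, cy = centers[label]
        rows.append(((rng.gauss(cx, spread), rng.gauss(cy, spread)), label))
    return rows


if __name__ == "__main__":
    rng = random.Random(0)
    centers = {0: (0.0, 0.0), 1: (3.0, 3.0)}
    real_train = make_blobs(500, centers, 1.0, rng)
    real_test = make_blobs(500, centers, 1.0, rng)
    # Pretend generator output: same centers, slightly wider spread.
    synthetic_train = make_blobs(500, centers, 1.2, rng)
    trtr = accuracy(fit_centroids(real_train), real_test)
    tstr = accuracy(fit_centroids(synthetic_train), real_test)
    print(f"train on real, test on real:      {trtr:.3f}")
    print(f"train on synthetic, test on real: {tstr:.3f}")
```

If the second score is close to the first, the synthetic data has preserved what the model needs for this task. A large gap suggests the generator is missing structure that matters downstream.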

3. Can synthetic data replace real data entirely?

In most cases, synthetic data complements rather than replaces real data. Mixing both improves robustness, but critical edge cases often still require real samples.

4. What tools and platforms support synthetic data generation?

Popular open-source options include SDV (Synthetic Data Vault) for Python, synthpop for R, TensorFlow GAN (TF-GAN), and PyTorch-based diffusion libraries such as Hugging Face Diffusers. Commercial platforms also offer enterprise-grade solutions.

5. What’s next for synthetic data?

Expect tighter integration with MLOps pipelines, stronger privacy guarantees, and standardized benchmarks to drive broader adoption across regulated industries.
